Going forward, we are still going to have short, five-minute-ish episodes on Friday that feature me solo, but we will increasingly be interspersing them with episodes that feature inspiring guests. And I won’t be making an effort to have these Friday guest episodes be anywhere near five minutes long. To start, I’m thinking of having them typically be 20 to 30 minutes long, but we’ll see how it goes with the guests and what the reception is like from you.

Filtering by Category: Five-Minute Friday

Who Dares Wins

Even if we don’t achieve what we originally set out to achieve, by having dared to achieve it, by having taken action in the direction of the achievement, we learn from the experience and we gain invaluable information about ourselves and the world. Having dared, we find ourselves at a new, enriched vantage point that we otherwise would never have ventured to. From there, whether we achieved the original goal or not, we can iterate — dare again — perhaps to achieve success at the original objective or perhaps we identify some entirely new objective that would have otherwise been inconceivable without having dared.

Daily Habit #11: Assigning Deliverables

To ensure that deliverables are assigned, if you’re running the meeting you can formally set the final meeting agenda item to be something like “assign deliverables”. If you’re not running the meeting, you can suggest having this final agenda item to the meeting organizer at the meeting’s outset or even as the meeting begins to wrap up. By assigning deliverables in this way, we not only make the best use of everyone’s time going forward, but we also maximize the probability that all of the essential action items are actually delivered upon.

We Are Living in Ancient Times

This article was originally adapted from a podcast, which you can check out here.

James Clear recently drew my attention to a quote by the writer Teresa Nielsen Hayden that I find fascinating and mind-boggling… and that I also vehemently agree with:

“My own personal theory is that this is the very dawn of the world. We are hardly more than an eye blink away from the fall of Troy, and scarcely an interglaciation removed from the Altamira cave painters. We live in extremely interesting ancient times.”

Yoga Nidra Practice

Rest and relaxation await as Steve Fazzari joins us this week for a special edition of the podcast! Tune in for a rejuvenating session of Yoga Nidra led beautifully by the expert.

The SuperDataScience show's available on all major podcasting platforms, YouTube, and at SuperDataScience.com.

Getting Kids Excited about STEM Subjects

For the fourth and final Friday episode featuring the inimitable Ben Taylor, he provides guidance on how to get kids excited about STEM (science, tech, engineering, math) subjects.

The A.I. Platforms of the Future

Ben Taylor returns for a third consecutive Five-Minute Friday! This week, he helps us look ahead and dig into what we can expect from the A.I. platforms of the future.

Why CEOs Care About A.I. More than Other Technologies

Ben Taylor is back for another Five-Minute Friday this week, this time to fill us in on why CEOs care more about A.I. than any other technology and how to sell them on your machine learning solution.

Special shout-out to my puppy Oboe who features indispensably in the video version of this episode... on Ben's lap! 🐶

How to Sell a Multimillion Dollar A.I. Contract

Starting today and running for four consecutive weeks, Five-Minute Friday episodes of SuperDataScience feature Ben Taylor as my guest. Each week, he answers a specific ML commercialization or education question.

Artificial General Intelligence is Not Nigh (Part 2 of 2)

Last week, I argued that "Artificial General Intelligence" — an algorithm with the learning capabilities of a human — will not arrive anytime soon. This week, I bolster my argument by summarizing points from luminary Yann LeCun.

Artificial General Intelligence is Not Nigh

A popular perception, propagated by film and television, is that machines are nearly as intelligent as humans. They are not, and they will not be anytime soon. Today's episode throws cold water on "Artificial General Intelligence".

Daily Habit #10: Limit Social Media Use

This article was originally adapted from a podcast, which you can check out here.

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Then, we had a series of Five-Minute Fridays that revolved around daily habits and we’ve been returning to this daily-habit theme periodically since.

The habits we covered in January and February were related to my morning routine. In the spring, these habit episodes have focused on productivity, and I’ve got another such productivity habit for you today.

To provide some context on the impetus behind this week’s habit, I’ve got a quote for you from the author Robert Greene, specifically from his book, Mastery: "The human that depended on focused attention for its survival now becomes the distracted scanning animal, unable to think in depth, yet unable to depend on instincts."

This suboptimal state of affairs — where our minds are endlessly flitting between stimuli — is exemplified by countless digital distractions we encounter every day, but none is quite as pernicious as the distraction brought to us by social media platforms. When using free social media platforms, you are typically the product — a product being sold to in-platform advertisers. Thus, to maximize ad revenue, these platforms are engineered to keep you seeking cheap, typically unsatisfying dopamine hits within them for as long as they can.

OpenAI Codex

OpenAI's Codex model is derived from the famed GPT-3 and allows humans to generate working code with natural language alone. Its flexibility and capability are quite remarkable! Hear all about it in today's episode.

Model Speed vs Model Accuracy

In the vast majority of real-world, commercial cases, the speed of a machine learning algorithm is more important than its accuracy. Hear why in today's Five-Minute Friday episode!

Collecting Valuable Data

Recently, I've been covering strategies for getting business value from machine learning. In today's episode, we dig into the most effective ways to obtain and label *commercially valuable* data.

Identifying Commercial ML Problems

The importance of effectively identifying a commercial problem *before* starting data collection or machine learning model development is the focus of this week's Five-Minute Friday.

Tech Startup Dramas

Recently I was hooked on three series: The Dropout (on Theranos), WeCrashed (WeWork), and Super Pumped (Uber). The latter two even feature machine learning, but all three are educational and entertaining.

Daily Habit #9: Avoiding Messages Until a Set Time Each Day

This article was originally adapted from a podcast, which you can check out here.

At the beginning of the new year, in Episode #538, I introduced the practice of habit tracking and provided you with a template habit-tracking spreadsheet. Then, we had a series of Five-Minute Fridays that revolved around daily habits and we’ve been returning to this daily-habit theme periodically since.

The habits we covered in January and February were related to my morning routine. In March, we began coverage of habits on intellectual stimulation and productivity, such as reading and carrying out a daily math or computer science exercise.

DALL-E 2: Stunning Photorealism from Any Text Prompt

OpenAI just released their "DALL-E 2" multimodal model that defines "state of the art" A.I.: Provide it with (even extremely bizarre) natural-language requests for an image and it generates it! Hear about it in today's episode, and check out this interactive post from OpenAI that demonstrates DALL-E 2's mind-boggling capabilities.

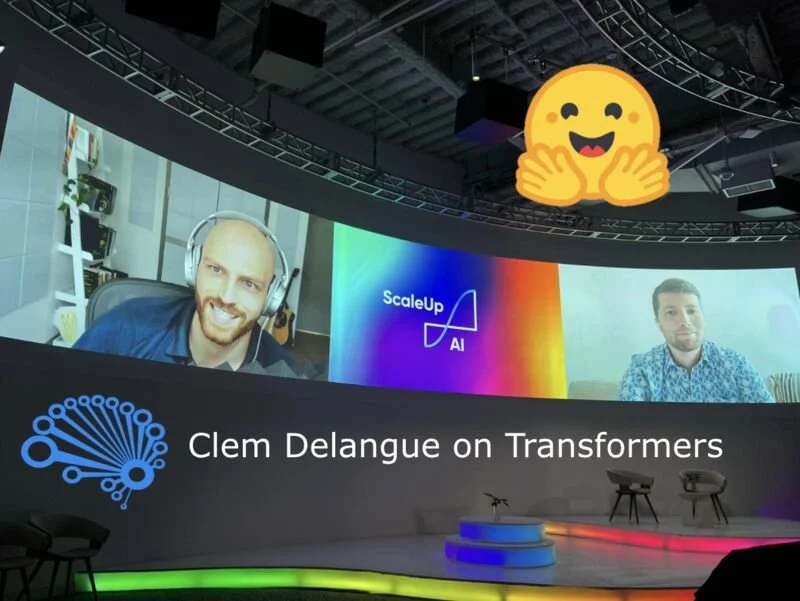

Clem Delangue on Hugging Face and Transformers

In today's SuperDataScience episode, Hugging Face CEO Clem Delangue fills us in on how open-source transformer architectures are accelerating ML capabilities. Recorded for yesterday's ScaleUp:AI conference in NY.
